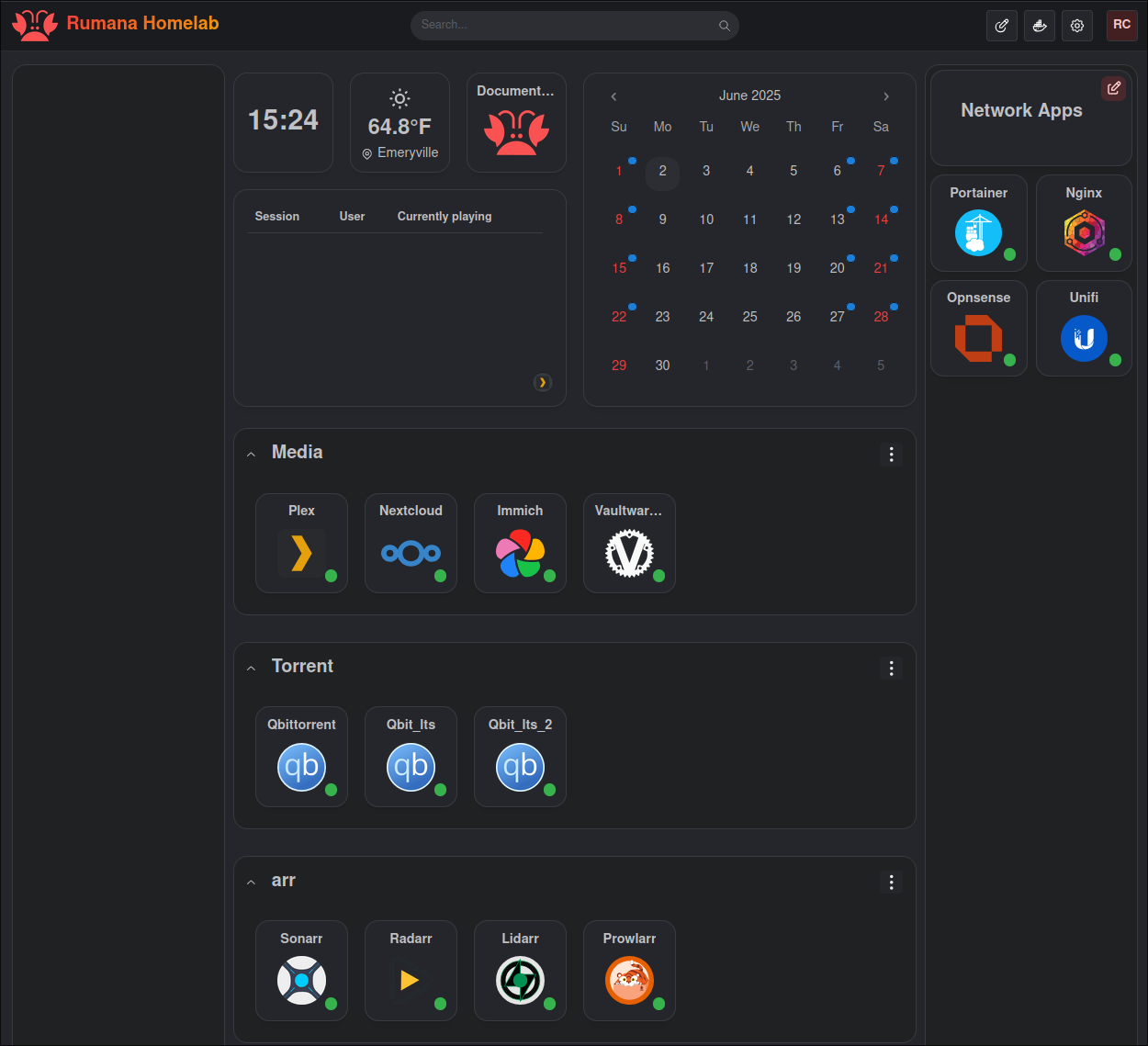

Why it exists

I wanted production-grade reliability at home, not just a pile of containers. The homelab runs on a two-node k3s cluster with GitOps from day one, so every change is reviewed and versioned, and the cluster self-heals by reconciling back to what is in Git. It keeps my media workflow, password management, AI experiments, and personal sites online.

Platform architecture

- GitOps: Argo CD app-of-apps reconciles infrastructure, platform services, and applications.

- Networking: Cloudflare front door, MetalLB for load balancing, and HAProxy ingress classes for public vs. restricted apps.

- Storage: Longhorn provides replicated PVCs with hourly snapshots and nightly NAS backups.

- AI stack: Migrated from Ollama/OpenWebUI to llama.cpp + LibreChat, backed by MongoDB and Qdrant so it scales cleanly across nodes.
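The storage schedule in the list above (hourly snapshots, nightly NAS backups) maps naturally onto Longhorn `RecurringJob` resources. The sketch below generates two such manifests as JSON, which `kubectl apply -f` accepts. The job names, retain counts, the 03:00 backup time, and the use of the `default` group are all assumptions for illustration, and the field names follow the `longhorn.io/v1beta2` CRD as I understand it, so check them against the installed Longhorn version. The NAS backup target itself is configured separately in Longhorn and is not shown.

```python
import json


def recurring_job(name, task, cron, retain, groups=("default",)):
    """Build a Longhorn RecurringJob manifest as a plain dict.

    Only the two task types mentioned in this write-up are accepted here;
    Longhorn itself supports more.
    """
    if task not in ("snapshot", "backup"):
        raise ValueError(f"unsupported task for this sketch: {task}")
    if retain < 1:
        raise ValueError("retain must be at least 1")
    return {
        "apiVersion": "longhorn.io/v1beta2",
        "kind": "RecurringJob",
        "metadata": {"name": name, "namespace": "longhorn-system"},
        "spec": {
            "name": name,
            "task": task,
            "cron": cron,            # standard five-field cron expression
            "retain": retain,        # how many snapshots/backups to keep
            "concurrency": 1,        # volumes processed in parallel
            "groups": list(groups),  # "default" is an assumed grouping
        },
    }


def storage_schedule():
    """Hourly snapshots plus a nightly backup (times are assumptions)."""
    return [
        recurring_job("hourly-snapshot", "snapshot", "0 * * * *", retain=24),
        recurring_job("nightly-backup", "backup", "0 3 * * *", retain=7),
    ]


if __name__ == "__main__":
    for manifest in storage_schedule():
        print(json.dumps(manifest, indent=2))
```

Keeping the schedule in code (or in plain YAML in the Git repo) means the snapshot and backup cadence is reviewed like any other change.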

Reliability upgrades

Critical services (HAProxy, CoreDNS, cert-manager, LibreChat, Vaultwarden, portfolio) run with anti-affinity, PDBs, and multi-replica deployments. Test workloads in platform/test validate new nodes and ingress paths before production apps land.
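As a rough illustration of how anti-affinity, a PDB, and multiple replicas fit together on a two-node cluster, the sketch below builds a Deployment and a PodDisruptionBudget as Python dicts and prints them as JSON. The service name `example-app`, its image, and the `app` label convention are hypothetical; the real manifests in the repo may differ.

```python
import json


def build_ha_manifests(name, image, replicas=2, min_available=1):
    """Return (deployment, pdb) dicts for a service spread across nodes."""
    if min_available >= replicas:
        # A PDB that requires every replica would block node drains entirely.
        raise ValueError("min_available must be lower than replicas")

    labels = {"app": name}  # hypothetical label convention
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "affinity": {
                        "podAntiAffinity": {
                            # Hard rule: no two replicas on the same node.
                            "requiredDuringSchedulingIgnoredDuringExecution": [
                                {
                                    "labelSelector": {"matchLabels": labels},
                                    "topologyKey": "kubernetes.io/hostname",
                                }
                            ]
                        }
                    },
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }
    pdb = {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": f"{name}-pdb"},
        "spec": {
            "minAvailable": min_available,
            "selector": {"matchLabels": labels},
        },
    }
    return deployment, pdb


if __name__ == "__main__":
    for manifest in build_ha_manifests("example-app", "example.com/example-app:1.0"):
        print(json.dumps(manifest, indent=2))
```

With two replicas and `minAvailable: 1`, a voluntary disruption such as a node drain can evict one pod at a time while the other keeps serving from the second node.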

What’s next

Finishing the migration to a dark-mode-aware UI, expanding network policies, and standing up the LGTM observability stack once HA work settles.

Two-node k3s + Longhorn

k3s keeps the control plane lightweight while Longhorn replicates every PVC, so HAProxy, LibreChat, Vaultwarden, and more can fail over to the other node with their data intact.

Argo CD app-of-apps

Every platform service and application is reconciled from Git. The platform/test workloads smoke-test new nodes before promotion.
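The app-of-apps pattern boils down to one root Argo CD `Application` that points at a directory of child `Application` manifests. The sketch below generates that shape as JSON. The repository URL, the `apps` directory, the `default` project, and the child paths other than `platform/test` are assumptions, not the actual repo layout.

```python
import json

REPO_URL = "https://example.com/homelab.git"  # hypothetical repository


def application(name, path):
    """Build an Argo CD Application that auto-syncs one path in the repo."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": REPO_URL,
                "targetRevision": "HEAD",
                "path": path,
            },
            "destination": {
                "server": "https://kubernetes.default.svc",  # in-cluster API
                "namespace": "argocd",
            },
            "syncPolicy": {
                # prune removes resources deleted from Git;
                # selfHeal reverts manual drift in the cluster.
                "automated": {"prune": True, "selfHeal": True}
            },
        },
    }


def app_of_apps(child_paths):
    """Return the root Application plus one child per repo path.

    In a real repo the child manifests would be committed under the
    root's path ("apps" here) so the root app creates them.
    """
    root = application("root", "apps")
    children = [application(p.replace("/", "-"), p) for p in child_paths]
    return root, children


if __name__ == "__main__":
    root, children = app_of_apps(
        ["infrastructure", "platform", "platform/test", "applications"]
    )
    for manifest in [root, *children]:
        print(json.dumps(manifest, indent=2))
```

Only the root Application has to be applied by hand; everything else is created and kept in sync by Argo CD from Git.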

LibreChat + llama.cpp

Swapped out Ollama for a multi-node-friendly llama.cpp deployment backed by MongoDB and Qdrant so conversational memory survives node loss.

Ops snapshot

MetalLB advertises services directly to my home network, HAProxy terminates TLS with certificates issued by cert-manager, and nightly Longhorn backups land on the NAS. The result is a homelab that feels like a production platform without losing tinkering power.
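For the MetalLB piece, a minimal sketch might look like the following: it checks that a load-balancer address range sits inside the home LAN, then emits an `IPAddressPool` and a matching `L2Advertisement` as JSON. Layer 2 mode is an assumption here (the setup could equally use BGP), and the pool name, address range, and subnet are made up for illustration.

```python
import ipaddress
import json


def metallb_l2_pool(name, first, last, lan_cidr):
    """Return (pool, advertisement) dicts for a MetalLB L2 address pool."""
    lan = ipaddress.ip_network(lan_cidr)
    start = ipaddress.ip_address(first)
    end = ipaddress.ip_address(last)
    if start > end:
        raise ValueError("first address must not be after last address")
    if start not in lan or end not in lan:
        # Addresses outside the LAN would never be reachable via ARP.
        raise ValueError(f"range {first}-{last} is outside {lan_cidr}")

    pool = {
        "apiVersion": "metallb.io/v1beta1",
        "kind": "IPAddressPool",
        "metadata": {"name": name, "namespace": "metallb-system"},
        "spec": {"addresses": [f"{first}-{last}"]},
    }
    advertisement = {
        "apiVersion": "metallb.io/v1beta1",
        "kind": "L2Advertisement",
        "metadata": {"name": f"{name}-l2", "namespace": "metallb-system"},
        "spec": {"ipAddressPools": [name]},
    }
    return pool, advertisement


if __name__ == "__main__":
    # Hypothetical range carved out of a 192.168.1.0/24 home network.
    manifests = metallb_l2_pool(
        "home-lan", "192.168.1.240", "192.168.1.250", "192.168.1.0/24"
    )
    for manifest in manifests:
        print(json.dumps(manifest, indent=2))
```

The range should also be excluded from the router's DHCP scope so MetalLB and DHCP never hand out the same address.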